DIY Terraform Cloud, Part 1 - The Game Plan

What would it take to migrate away from Terraform Cloud? After HashiCorp moved to resource-based pricing and the BSL license last year, this question is on the roadmaps of many infrastructure teams. This article outlines the scope of such a migration.

In this article I will describe the main aspects of Terraform Cloud and what the DIY equivalents for them might look like at a high level, pretending that ready-made tools for that do not exist (they do, in fact; I am building one). This will hopefully allow the reader to make well-informed decisions on whether or not to commit to a migration and how long it might take.

What exactly is Terraform Cloud?

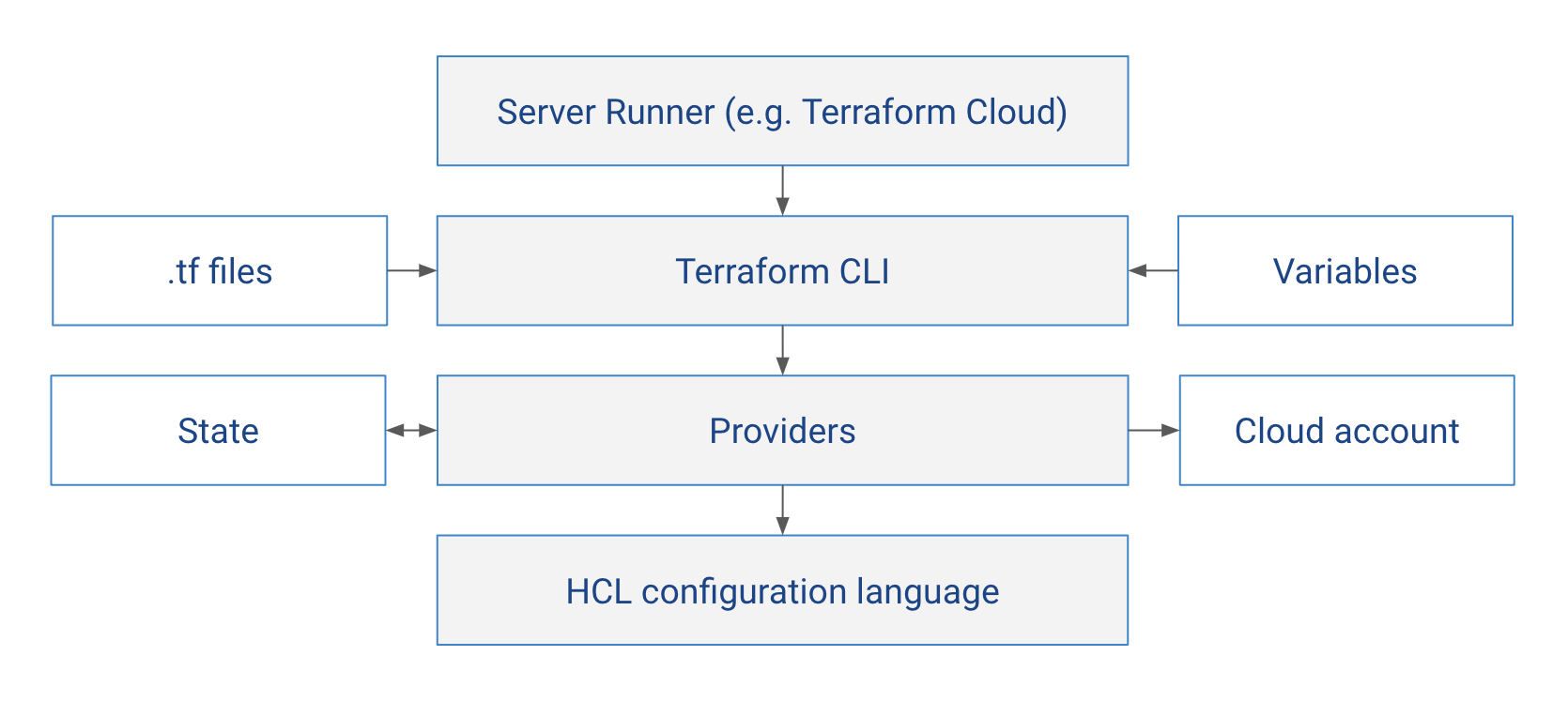

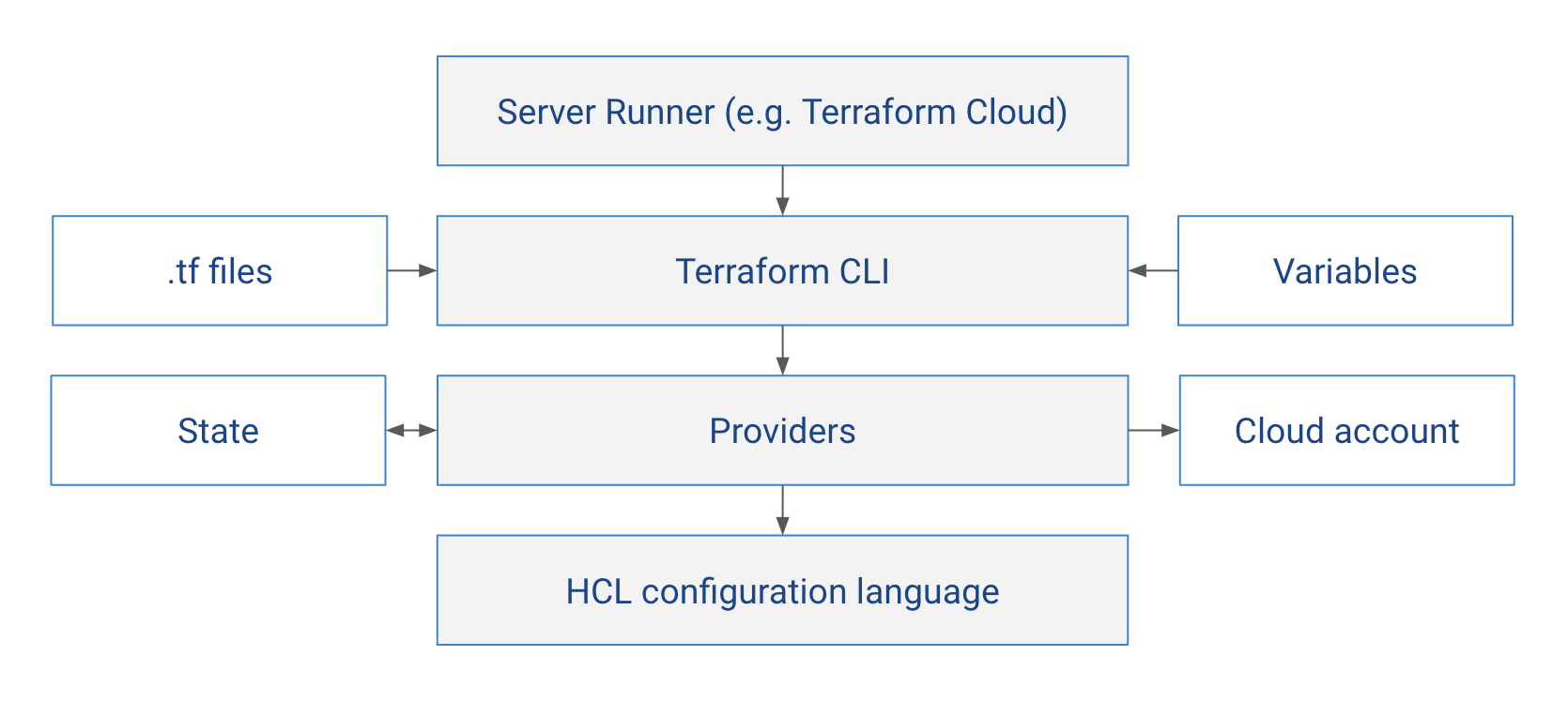

On HashiCorp’s website Terraform is presented as a singular product, inseparable from the Cloud offering. While the business reasoning behind this framing is understandable, it is not an accurate representation of reality. It helps to look at Terraform as a stack of layers, each depending upon the previous one:

- Terraform itself is a CLI that can run anywhere - including your laptop or your CI/CD platform.

- The CLI takes the .tf code files that describe the desired configuration, along with .tfvars files and environment variables, and passes them on to the provider.

- The provider knows how to translate resource definitions into API calls to a target cloud like AWS, GCP or Azure. It makes those calls to read and update the actual configuration, and the resulting changes are recorded in state.

- The HCL configuration language defines the syntax of the .tf files that resource definitions are read from. The language itself is general-purpose, though, and not concerned with cloud infrastructure - you can think of it as a more readable JSON.

Terraform Cloud is the topmost block. You can think of it as a “server runtime environment” for the Terraform CLI. It knows which variables and arguments to pass to the CLI and runs plan and apply jobs, like a specialist CI/CD system for Terraform.

Features of Terraform Cloud

To migrate an existing production setup from Terraform Cloud, we’d need to understand which aspects of it need to be replaced, and what the replacement could be. So let’s first list the main features of Terraform Cloud to get a sense of scope:

- Remote state - state is stored on the server, so that there is a single source of truth matching cloud configurations

- Remote execution of plan / apply - provides a consistent runtime environment for the Terraform CLI with centralised access control

- Preview and approval of plans - allows you to see what changes are being proposed before the pull request is merged, with manual approval if needed

- Variable sets - so that the same .tf configuration can be instantiated with different parameters

- Private registry - to keep some of your internal modules private to your organisation

- Policy sets - to ensure that unwanted changes don’t get made

Migrating away from TFC then means finding an alternative solution for each of these features, and repeating that for all your projects. Let’s cover those one by one.

Remote state: use S3 backend

Luckily, Terraform supports the S3 backend natively - all you need to do is create an S3 bucket and update your backend configuration. You can even define that bucket itself via Terraform (example configuration). Some considerations:

- make sure to enable versioning in the bucket - you’d want to keep all past versions of the state for debugging purposes

- you can have multiple states in one bucket (controlled by the `key` attribute in the backend configuration), so you can have one state backend for your production environment storing all production states, one for staging, and so on. In a bigger organisation it’ll probably be multiple state buckets per environment organised based on “domains” or “departments”, but still not as many as state files

- it doesn’t have to be S3 from AWS - you can use any S3-compatible object storage, like Cloudflare R2 (link)
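
To make the bucket-per-environment, key-per-state layout concrete, here is a minimal Python sketch that renders the backend block for a project. The `bucket`, `key` and `region` attributes are the S3 backend's own settings; the bucket name, the project names and the `<project>/terraform.tfstate` key scheme are hypothetical and only there for illustration.

```python
def render_s3_backend(bucket: str, project: str, region: str) -> str:
    """Return the text of a backend.tf that keeps one project's state in `bucket`."""
    # Assumed naming scheme: one key per state file, grouped by project.
    key = f"{project}/terraform.tfstate"
    return (
        "terraform {\n"
        '  backend "s3" {\n'
        f'    bucket = "{bucket}"\n'
        f'    key    = "{key}"\n'
        f'    region = "{region}"\n'
        "  }\n"
        "}\n"
    )


if __name__ == "__main__":
    # Hypothetical names: a single production bucket holding several states.
    for project in ("networking", "database"):
        print(render_s3_backend("acme-tfstate-prod", project, "eu-west-1"))
```

Each project gets its own state file while sharing the environment's bucket, so the number of buckets stays small even as the number of states grows.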

To migrate state from TFC to S3, there are 2 main methods:

- run `terraform init -migrate-state` after updating the backend configuration

- or go fully manual: `terraform state pull > my.tfstate`, then `terraform state push my.tfstate` once the new backend is configured
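
Whichever method you pick, it is worth checking that the state arrived intact. The sketch below is one possible sanity check, not part of Terraform itself: it reads two state files pulled with `terraform state pull` (one before and one after the migration) and compares the `lineage` and the number of entries in `resources`, both of which are top-level fields in the state file. The function names are made up for this example.

```python
import json
from pathlib import Path


def summarize_state(path: str) -> dict:
    """Read a Terraform state file and return the fields worth comparing."""
    state = json.loads(Path(path).read_text())
    return {
        "lineage": state.get("lineage"),
        "serial": state.get("serial"),
        "resources": len(state.get("resources", [])),
    }


def same_state(before: dict, after: dict) -> bool:
    """True when lineage and resource count are unchanged.

    The serial is deliberately ignored, since it is a write counter
    and may legitimately differ between the two snapshots.
    """
    return (
        before["lineage"] == after["lineage"]
        and before["resources"] == after["resources"]
    )
```

For example, `same_state(summarize_state("before.tfstate"), summarize_state("after.tfstate"))` should be true right after a migration, assuming nothing was applied in between.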

Remote execution: write a CI pipeline

There is no single right way to design a CI/CD pipeline; and for Terraform it is particularly tricky because of state. It is also highly dependent on your application’s architecture, the way you split your infrastructure into different state files, and so on. But one thing remains common in any CI implementation: stages. You’d want some actions (like plan) to run first, and only perform others (like apply) if prior stages are successful.

One way to build an IaC pipeline is to follow the same model as application pipelines. The plan stage is then the equivalent of build, producing an artifact (that can be stored); and apply is akin to deployment, taking the plan artifact and provisioning changes.

There is one difference, however: the concept of environment. With applications, you make a change and want it promoted the exact same way across all environments. But Terraform is the environment (it literally describes the infrastructure). Most teams have a dedicated Terraform configuration for each environment like dev, staging, prod etc. So our pipeline will look different:

- NOT this: validate → deploy to dev → deploy to staging → deploy to prod

- Rather something like this: validate → plan → apply, separately for each environment
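
As a rough sketch of the second shape, the Python below builds the command sequence for one environment. It assumes a hypothetical layout with one directory per environment (`environments/dev`, `environments/prod` and so on) and a plan file named `tfplan`; the flags themselves (`-chdir`, `-input=false`, `-out`) are standard Terraform CLI options.

```python
from typing import List


def pipeline_commands(env_dir: str, apply: bool = False) -> List[List[str]]:
    """Return the Terraform commands for one environment, in stage order."""
    base = ["terraform", f"-chdir={env_dir}"]
    commands = [
        base + ["init", "-input=false"],
        base + ["validate"],
        # Save the plan so that apply executes exactly what was reviewed.
        base + ["plan", "-input=false", "-out=tfplan"],
    ]
    if apply:
        commands.append(base + ["apply", "-input=false", "tfplan"])
    return commands


if __name__ == "__main__":
    # Each environment runs its own validate -> plan -> apply sequence.
    for env in ("dev", "staging", "prod"):
        for cmd in pipeline_commands(f"environments/{env}", apply=True):
            print(" ".join(cmd))
```

A CI job would run each command in turn and stop at the first failure, which gives the stage ordering described above without promoting anything from one environment to the next.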

One non-obvious thing to consider: rebasing on top of the main branch. If someone else merged and applied other changes while the PR was open, then planning from the now-stale branch will attempt to undo those changes (delete the resources). So you always want to plan as if the PR was merged on top of the latest changes.

A pipeline like that can be built in any CI platform - GitHub Actions or GitLab or Azure DevOps or even Jenkins. For the rest of the article we’ll focus on GitHub Actions.

Plan preview: comment on the pull request

If we trigger our pipeline upon merge to the main branch, then plan will only run after the changes are merged. This is not ideal: you’d want to see the plan output first, to decide whether or not to merge the changes.

We can change our pipeline a bit: instead of planning after merge, we can run plan before merging for every pull request. In GitHub Actions, that means creating a separate workflow file that runs terraform plan on the pull_request event. Its output can be stored in a file.

Then, as a next step in the pipeline, you can append a comment to the PR from inside the job:

- Read from the file created in the previous step

- Use the official github-script action to add a comment to the PR
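
Raw plan output can be long, so it usually helps to shape it before posting. The following Python sketch shows one way to turn the saved plan text into a comment body: it wraps the output in a collapsible block and, if the text is too long, keeps only the tail, where Terraform prints its summary line. The function name and the 60000-character cap are assumptions; the cap is simply chosen to stay comfortably below GitHub's limit on comment length.

```python
FENCE = "`" * 3  # a Markdown code fence, built here to keep the example embeddable


def format_plan_comment(plan_text: str, max_chars: int = 60000) -> str:
    """Wrap plan output in a collapsible Markdown block, truncating if needed."""
    note = ""
    if len(plan_text) > max_chars:
        # Keep the end of the output: that is where the plan summary lives.
        plan_text = plan_text[-max_chars:]
        note = "_Plan output truncated; showing the last part only._\n\n"
    return (
        "### Terraform plan\n\n"
        f"{note}"
        "<details><summary>Show plan</summary>\n\n"
        f"{FENCE}\n{plan_text}\n{FENCE}\n\n"
        "</details>\n"
    )


if __name__ == "__main__":
    print(format_plan_comment("Plan: 1 to add, 0 to change, 0 to destroy."))
```

The resulting string is what the commenting step would post as the comment body.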

Plan approval: use Environment Protection Rules

For mission-critical parts of the stack like production environment infrastructure, you’d often want explicit approval before every apply. This can be achieved using the Environments feature in GitHub Actions:

- Under 'Environment protection rules', enable 'Required reviewers'

This way the apply workflow for staging will run automatically on merge; but for production, it will wait for an explicit approval. The approver will see a “pending approval” job in the Actions tab.

Variable Sets: use Action Secrets and .tfvars files

In Terraform Cloud, Variable Sets support Terraform Variables or Environment Variables. In addition, you can mark some of them as sensitive - effectively secrets.

To replace this functionality, we can use 2 native features of Terraform:

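- Non-sensitive variables can be stored in .tfvars files, and safely pushed to git. Files named terraform.tfvars or ending in .auto.tfvars are picked up automatically by the Terraform CLI as inputs; any other file has to be passed explicitly with -var-file.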

- Sensitive values can be stored as Action Secrets (again, the Environments feature of GitHub Actions comes in handy). You can prefix variable names with `TF_VAR_` so that the Terraform CLI recognises them as inputs.
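
The `TF_VAR_` convention is easy to get subtly wrong, so here is a small Python sketch of the mapping. It assumes the secrets have already been read into a dictionary keyed by the Terraform variable name; `terraform_env` and the example variable `db_password` are hypothetical.

```python
import os
from typing import Dict, Optional


def terraform_env(
    secrets: Dict[str, str], base_env: Optional[Dict[str, str]] = None
) -> Dict[str, str]:
    """Return an environment in which each secret is exposed as TF_VAR_<name>."""
    env = dict(os.environ if base_env is None else base_env)
    for name, value in secrets.items():
        # The part after TF_VAR_ must match the variable name declared in the .tf files.
        env[f"TF_VAR_{name}"] = value
    return env


if __name__ == "__main__":
    env = terraform_env({"db_password": "example-only"}, base_env={})
    print(sorted(env))
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when invoking the Terraform CLI, so the secret never has to be written to a file.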

Policy sets: use OPA and / or Checkov

Terraform Cloud originally came with Sentinel, a policy-as-code language by HashiCorp that did not gain much popularity. Recently HashiCorp added support for Open Policy Agent policies too. Policy checks naturally belong in the CI pipeline as well, preferably as early as possible to stay true to the “shift left” principle. There are two leading tools in the space:

- Checkov is a policy-as-code tool designed specifically for infrastructure-as-code frameworks, including Terraform. You can run it as a standalone step in your CI pipeline (docs)

- Open Policy Agent is a general-purpose policy-as-code tool that can be used to describe any policy for anything. It is more powerful, more flexible, but also has a steeper learning curve and will require more upfront work to write the policies to check your Terraform code. (docs) You can also run OPA as an action.
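
To give a feel for what such a policy evaluates, here is a plain-Python stand-in (neither Rego nor a Checkov check) that inspects the JSON form of a plan, as produced by `terraform show -json` on a saved plan file. It flags any planned deletion of a protected resource type, using the `resource_changes` entries and their `change.actions` lists. The function name and the choice of protected types are assumptions made for the example.

```python
from typing import List, Set


def destructive_changes(plan: dict, protected_types: Set[str]) -> List[str]:
    """Return addresses of protected resources that the plan would delete."""
    violations = []
    for rc in plan.get("resource_changes", []):
        actions = rc.get("change", {}).get("actions", [])
        # A replacement shows up as both "delete" and "create", so it is caught too.
        if rc.get("type") in protected_types and "delete" in actions:
            violations.append(rc["address"])
    return violations


if __name__ == "__main__":
    sample_plan = {
        "resource_changes": [
            {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket",
             "change": {"actions": ["update"]}},
            {"address": "aws_db_instance.main", "type": "aws_db_instance",
             "change": {"actions": ["delete", "create"]}},
        ]
    }
    print(destructive_changes(sample_plan, {"aws_db_instance"}))
```

A CI step would fail the job when the returned list is non-empty. A real OPA or Checkov policy would express the same rule declaratively and come with a library of ready-made checks.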

Private registry

A private registry is beneficial when the infrastructure is large enough to have an internal ecosystem of modules re-used by multiple teams that are not necessarily aware of one another’s work. You can either deploy one of the open-source Terraform-specific registries, or use a general-purpose commercial one:

- Open-source registries designed specifically for Terraform: boring registry, terralist, tapir

- General-purpose private registries like JFrog Artifactory also support terraform modules

Conclusion

Migrating away from Terraform Cloud, while certainly not trivial, is not as hard as it sounds. We’ve covered the high-level considerations to replace each feature of Terraform Cloud:

- Remote state

- Remote execution of plan / apply

- Preview and approval of plans

- Variable sets

- Policy sets

- Private registry

Each feature probably warrants a dedicated article for detailed implementation; but the overall scope of work is clear. Other than these 6 things, there is not much else to do to move away from TFC. This seems doable even if done completely from scratch, not using any ready-made tools for the job. Hopefully this gives you a ballpark sense of effort required to achieve it in your team and makes it a bit easier to decide whether or not to commit to a migration.

If you find this useful and would like to see follow-up articles in the series that go into each aspect of the migration in depth, please share the article and let us know which ones you'd like covered next!

Digger is an Open Source Infrastructure as Code management platform for Terraform and OpenTofu. Join our Slack community.

Edit (Wednesday, 3rd December 2025): The Digger Project has been renamed to OpenTaco! More details on the OpenTaco site